Facebook is starting to implement a system that assigns each user a credibility score rating their trustworthiness on the social network, in an effort to stop the spread of fake news.

According to a detailed report by The Washington Post, the Facebook trustworthiness rating system assigns each user a “reputation score” on a scale from zero to one. The report did not indicate whether the score is limited to the two values zero and one, or whether it can take intermediate values such as increments of tenths (0.1, 0.2, 0.3…).

The Washington Post reports that the reputation rating system has been in effect over the past year and is part of Facebook’s enhanced methods to fight against fake news stories and content that violates Facebook’s Community Standards.

Facebook trustworthiness rating system

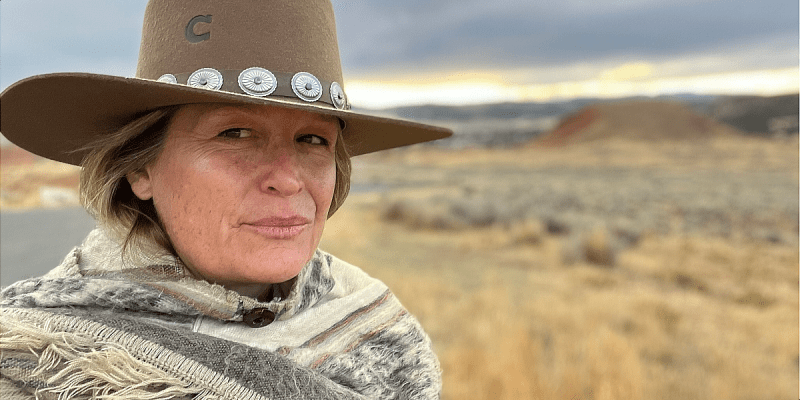

Facebook product manager Tessa Lyons told The Washington Post that the social network has been using information provided by its users to help quell the spread of misinformation on Facebook. Whenever you flag a post as spam or malicious, or report a friend request you believe is phony, Facebook assigns you a rating based partly on how accurate your past flags and reports have been. According to The Washington Post, the trustworthiness score is one of thousands of “behavioral clues” that help Facebook decide which flagged content to examine more closely for policy violations.

“One of the signals we use is how people interact with articles,” said Lyons to The Washington Post in an email. “For example, if someone previously gave us feedback that an article was false and the article was confirmed false by a fact-checker, then we might weight that person’s future false-news feedback more than someone who indiscriminately provides false-news feedback on lots of articles, including ones that end up being rated as true.”
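To make the idea in Lyons’s example concrete, here is a minimal sketch of how past accuracy could be turned into a zero-to-one weight. It is an illustration only, not Facebook’s method, which the company has not disclosed: the `Reporter` class, its field names, the smoothing formula and the `weighted_flag_signal` helper are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class Reporter:
    """Hypothetical record of one user's past false-news reports."""
    confirmed_false: int = 0  # reports a fact-checker later confirmed as false
    rated_true: int = 0       # reports on articles that were later rated as true

    def score(self) -> float:
        """Smoothed accuracy, always strictly between 0 and 1.

        Adding 1 to the numerator and 2 to the denominator (Laplace
        smoothing) gives a user with no history a neutral 0.5.
        This formula is an assumption, not Facebook's.
        """
        total = self.confirmed_false + self.rated_true
        return (self.confirmed_false + 1) / (total + 2)


def weighted_flag_signal(reporters: list[Reporter]) -> float:
    """Sum the scores of every user who flagged one article."""
    return sum(r.score() for r in reporters)


careful = Reporter(confirmed_false=8, rated_true=0)
indiscriminate = Reporter(confirmed_false=2, rated_true=18)
newcomer = Reporter()

for name, user in [("careful", careful),
                   ("indiscriminate", indiscriminate),
                   ("newcomer", newcomer)]:
    print(f"{name}: {user.score():.2f}")
```

Under these assumptions, a flag from the careful reporter counts for far more than one from the user who flags indiscriminately, and a newcomer with no track record sits in between.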

The report also notes that Facebook did not share all the information it will use to determine a user’s trustworthiness rating. If Facebook disclosed the criteria behind the rating, those who use the social network to spread misinformation or malice could develop new ways to game the system so that they appear trustworthy in the eyes of Facebook.

In an age of algorithms and other automated processes for detecting fake news on social networks, Facebook is trying to use the data it collects each time you engage with a post to help fight misinformation. So as you judge the content you consume on Facebook, know that Facebook is using your engagement to judge you. Depending on your opinion of the social network, this could be a step in the right direction toward preventing the spread of disinformation to the platform’s 2.23 billion active users.