By David Shepardson and Diane Bartz

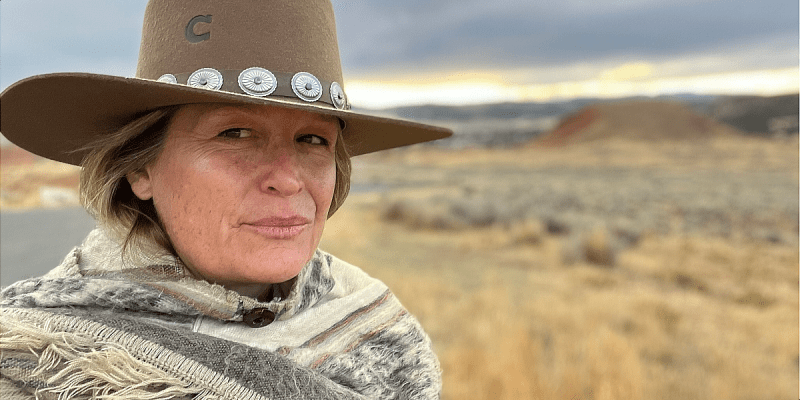

WASHINGTON (Reuters) - Former Facebook employee and whistleblower Frances Haugen will urge the U.S. Congress on Tuesday to regulate the social media giant, which she plans to liken to tobacco companies that for decades denied that smoking damaged health, according to prepared testimony seen by Reuters.

“When we realized tobacco companies were hiding the harms it caused, the government took action. When we figured out cars were safer with seatbelts, the government took action,” said Haugen’s written testimony to be delivered to a Senate Commerce subcommittee. “I implore you to do the same here.”

Haugen will tell the panel that Facebook executives regularly chose profits over user safety.

“The company’s leadership knows ways to make Facebook and Instagram safer and won’t make the necessary changes because they have put their immense profits before people. Congressional action is needed,” she will say. “As long as Facebook is operating in the dark, it is accountable to no one. And it will continue to make choices that go against the common good.”

Senator Amy Klobuchar, who is on the subcommittee, said that she would ask Haugen about the Jan. 6 attack on the U.S. Capitol by supporters of then-President Donald Trump.

“I am also particularly interested in hearing from her about whether she thinks Facebook did enough to warn law enforcement and the public about January 6th and whether Facebook removed election misinformation safeguards because it was costing the company financially,” Klobuchar said in an emailed comment.

The senator also said that she wanted to discuss Facebook’s algorithms, and whether they “promote harmful and divisive content.”

Haugen, who worked as a product manager on Facebook’s civic misinformation team, was the whistleblower who provided documents used in a Wall Street Journal investigation and a Senate hearing on Instagram’s harm to teen girls.

Facebook owns Instagram as well as WhatsApp.

The company did not respond to a request for comment.

Haugen added that “Facebook’s closed design means it has no oversight — even from its own Oversight Board, which is as blind as the public.”

That makes it impossible for regulators to serve as a check, she said.

“This inability to see into the actual systems of Facebook and confirm that Facebook’s systems work like they say is like the Department of Transportation regulating cars by watching them drive down the highway,” her testimony says. “Imagine if no regulator could ride in a car, pump up its wheels, crash test a car, or even know that seat belts could exist.”

The Journal’s stories, based on Facebook internal presentations and emails, showed the company contributed to increased polarization online when it made changes to its content algorithm; failed to take steps to reduce vaccine hesitancy; and was aware that Instagram harmed the mental health of teenage girls.

Haugen said Facebook had done too little to prevent its platform from being used by people planning violence.

“The result has been a system that amplifies division, extremism, and polarization — and undermining societies around the world. In some cases, this dangerous online talk has led to actual violence that harms and even kills people,” she said.

Facebook was used by people planning mass killings in Myanmar and by Trump supporters in the Jan. 6 assault who were determined to toss out the 2020 election results.

(Reporting by David Shepardson; additional reporting by Diane Bartz; editing by Rosalba O’Brien, David Gregorio and Sonya Hepinstall)